Quadcopter

VIO quadcopter for FOV-constrained precision landing

Corresponding thesis: PDF, slides

Documentation: vioquad_doc

Code: vioquad_land

Overview

This project addresses vision-based precision landing of a quadcopter. Poor visibility of the landing pad can cause inaccurate landings and collisions, and it reduces safety for bystanders. To improve landing accuracy, trajectory constraints are designed so that the down-facing onboard camera keeps the landing pad in its field of view (FOV).
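The visibility requirement can be illustrated with a small geometric check. The sketch below assumes an ideal pinhole camera with a rectangular (pyramidal) FOV and a camera frame with x to the right, y down and z along the optical axis. The function name `pad_in_fov` and its half-angle parameters are hypothetical and not taken from vioquad_land. A wide-angle lens such as the IMX219-160's would also need a distortion model, which this sketch ignores.

```python
import math


def pad_in_fov(pad_cam, half_fov_x_deg, half_fov_y_deg):
    """Return True if a point expressed in the camera frame lies inside a
    pyramidal field of view with the given half-angles (in degrees).

    Assumed camera-frame convention: x right, y down, z along the optical axis.
    """
    x, y, z = pad_cam
    if z <= 0.0:
        return False  # the point is behind the camera
    # Angle between the optical axis and the line of sight, per image axis.
    ang_x = math.degrees(math.atan2(abs(x), z))
    ang_y = math.degrees(math.atan2(abs(y), z))
    return ang_x <= half_fov_x_deg and ang_y <= half_fov_y_deg


if __name__ == "__main__":
    # Camera 2 m above the pad; hypothetical 30-degree half-angles.
    print(pad_in_fov((0.5, 0.0, 2.0), 30.0, 30.0))  # prints True  (~14 deg off-axis)
    print(pad_in_fov((1.5, 0.0, 2.0), 30.0, 30.0))  # prints False (~37 deg off-axis)
```

The check depends only on the angle between the optical axis and the line of sight, so the same lateral offset that is acceptable at high altitude can push the pad out of view close to the ground.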

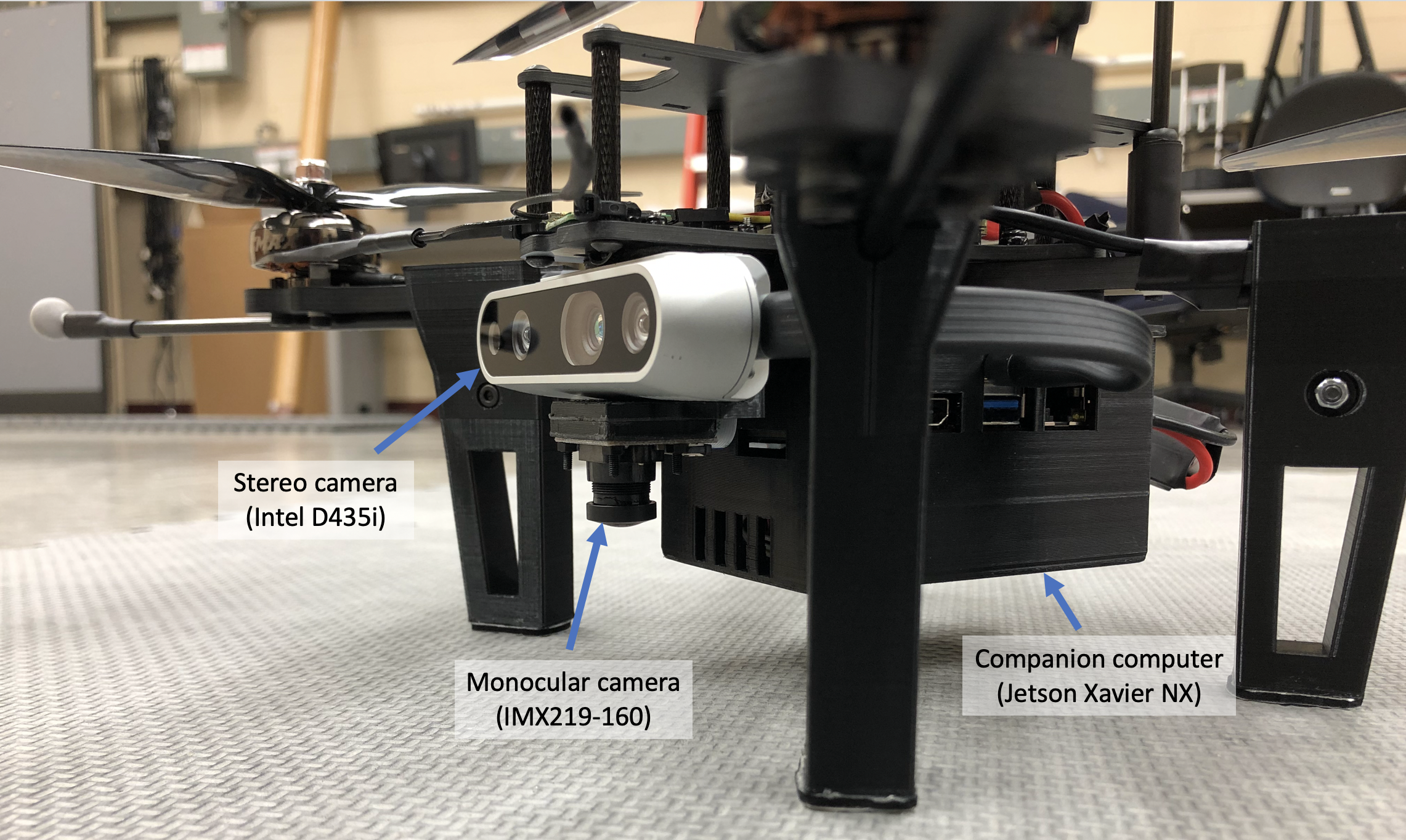

Hardware

This quadcopter was designed to use an Intel RealSense D435i stereo camera (front-facing) for visual-inertial odometry (VIO), while a monocular IMX219-160 camera (down-facing) detects the landing pad. The companion computer (CC) is an NVIDIA Jetson Xavier NX, and the flight control unit (FCU) is the Kakute H7 v2.

Implementation

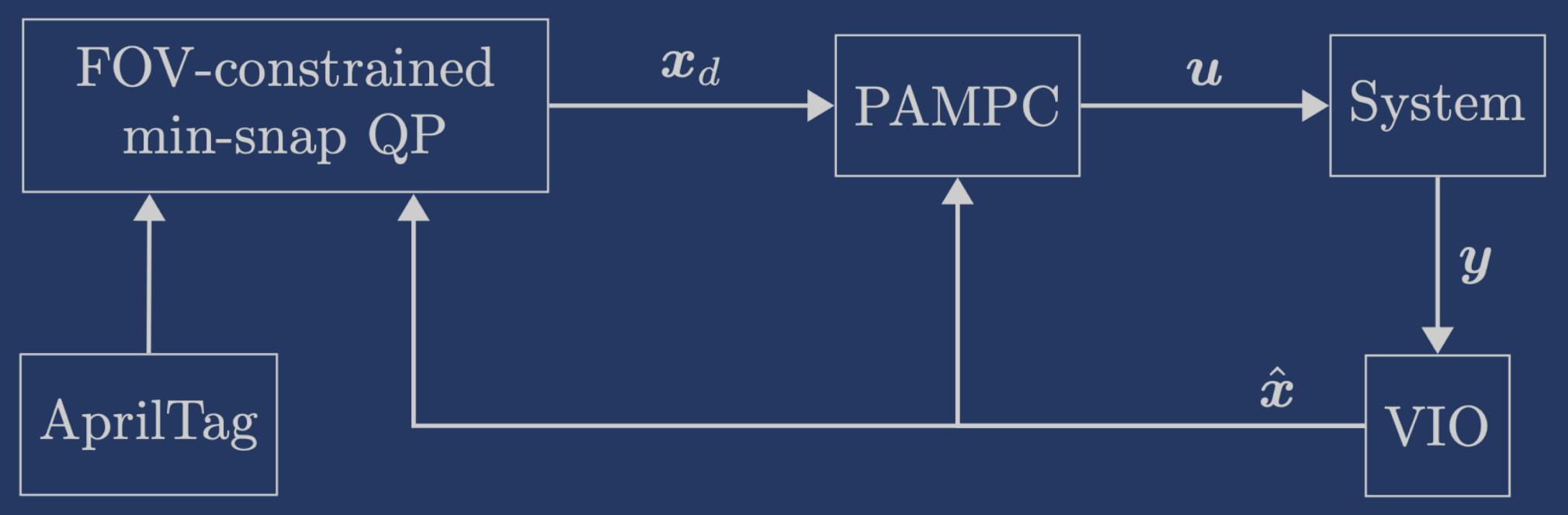

PX4 is used as the low-level flight control software; it converts angular-rate and thrust commands into motor speeds. During the landing phase (i.e., after the monocular camera detects the landing pad), the angular rates and thrust are computed with a perception-aware model predictive control (PAMPC) method [1], implemented with the ACADO Toolkit. Here PAMPC tracks the landing trajectory while trying to keep the landing-pad center in the middle of the monocular camera’s image.
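To make the perception objective concrete, the following sketch evaluates a PAMPC-style stage cost for a single predicted state: a quadratic position-tracking term plus a penalty on the landing-pad center’s normalized image-plane coordinates, which are zero at the image center. The function names and the weights `q_pos`, `w_u`, `w_v` and `w_perc` are hypothetical. The pad position is assumed to be already expressed in the camera frame (z along the optical axis), and the sketch omits the other state and input terms of a full MPC cost as well as the ACADO solver that minimizes the cost over the prediction horizon.

```python
def perception_cost(pad_cam, w_u=1.0, w_v=1.0):
    """Penalty on the landing-pad center's normalized image coordinates.

    pad_cam: (x, y, z) of the pad center in the camera frame, z along the
    optical axis (assumed convention). (u, v) = (0, 0) is the image center.
    """
    x, y, z = pad_cam
    if z <= 1e-6:
        raise ValueError("landing pad must be in front of the camera")
    u, v = x / z, y / z  # pinhole projection onto the normalized image plane
    return w_u * u * u + w_v * v * v


def stage_cost(pos, pos_ref, pad_cam, q_pos=10.0, w_perc=1.0):
    """Hypothetical PAMPC-style stage cost: tracking term + perception term."""
    tracking = q_pos * sum((p - r) ** 2 for p, r in zip(pos, pos_ref))
    return tracking + w_perc * perception_cost(pad_cam)


if __name__ == "__main__":
    # Same tracking error, pad centered vs. pad off-center in the image.
    print(stage_cost((0.1, 0.0, 2.0), (0.0, 0.0, 2.0), (0.0, 0.0, 2.0)))
    print(stage_cost((0.1, 0.0, 2.0), (0.0, 0.0, 2.0), (1.0, 0.0, 2.0)))
```

With identical tracking error, the second call returns a larger cost because the pad is off-center. The ratio `w_perc / q_pos` decides how much tracking accuracy the controller will trade for keeping the pad centered.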

The landing trajectory tracked by PAMPC is generated by a minimum-snap quadratic program (QP) [2], implemented in vioquad_land. FOV restrictions were designed and included as linear constraints in the min-snap QP, so the generated trajectory keeps the landing pad in the camera’s FOV. The landing pad carries an AprilTag marker, and the AprilTag 3 algorithm [3] (implemented by apriltag_ros) estimates the relative pose between the quadcopter and the landing pad. Finally, ROVIO [4] (implemented by rovio) serves as the VIO algorithm that estimates the quadcopter’s pose and velocity.
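The two ingredients of the trajectory generator can be sketched in simplified form. For a single segment that starts and ends at rest (zero velocity, acceleration and jerk), the minimum-snap trajectory has the closed form p(t) = p0 + (pf - p0)(35s^4 - 84s^5 + 70s^6 - 20s^7) with s = t/T, so no QP solver is needed. For the FOV, assuming a camera looking straight down from a near-level vehicle (z up), keeping the pad inside a pyramid with half-angles αx, αy means |x - x_pad| <= tan(αx)(z - z_pad), and likewise for y. These are four inequalities that are linear in position. Because position is linear in the polynomial coefficients, such rows can be imposed at sampled times as linear QP constraints. The sketch below only checks them on the sampled closed-form trajectory. It does not solve the constrained multi-segment QP used in vioquad_land, and all function names and numbers are illustrative.

```python
import math


def min_snap_rest_to_rest(p0, pf, T, t):
    """Position at time t on the single-segment minimum-snap trajectory from
    rest at p0 to rest at pf over duration T (zero velocity, acceleration and
    jerk at both ends)."""
    s = min(max(t / T, 0.0), 1.0)  # normalized time, clamped to [0, 1]
    blend = 35 * s**4 - 84 * s**5 + 70 * s**6 - 20 * s**7
    return tuple(a + (b - a) * blend for a, b in zip(p0, pf))


def fov_constraint_rows(pad, half_fov_x, half_fov_y):
    """Linear inequalities A p <= b (p = (x, y, z), z up) that keep `pad`
    inside the FOV pyramid of a camera looking straight down.
    Half-angles are in radians."""
    kx, ky = math.tan(half_fov_x), math.tan(half_fov_y)
    xp, yp, zp = pad
    # +/-(x - xp) <= kx (z - zp) and +/-(y - yp) <= ky (z - zp), rearranged.
    A = [(1.0, 0.0, -kx), (-1.0, 0.0, -kx), (0.0, 1.0, -ky), (0.0, -1.0, -ky)]
    b = [xp - kx * zp, -xp - kx * zp, yp - ky * zp, -yp - ky * zp]
    return A, b


def satisfies(A, b, p, tol=1e-9):
    """Check every row of A p <= b at position p."""
    return all(sum(ai * pi for ai, pi in zip(row, p)) <= bi + tol
               for row, bi in zip(A, b))


if __name__ == "__main__":
    A, b = fov_constraint_rows((0.0, 0.0, 0.0), math.radians(30), math.radians(30))
    # Descend from 3 m to 0.3 m above the pad in 4 s, sampled every 0.2 s.
    samples = [min_snap_rest_to_rest((1.0, 0.0, 3.0), (0.0, 0.0, 0.3), 4.0, 0.2 * i)
               for i in range(21)]
    print(all(satisfies(A, b, p) for p in samples))  # prints True
    print(satisfies(A, b, (2.5, 0.0, 3.0)))          # prints False: pad out of view
```

In this rest-to-rest case all axes share one monotonic blend profile, so the path is a straight segment between the endpoints, and satisfying the constraints at both ends implies satisfying them everywhere. With several segments and intermediate waypoints that shortcut no longer holds, which is why the constraints are imposed at sampled times.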

Experiment

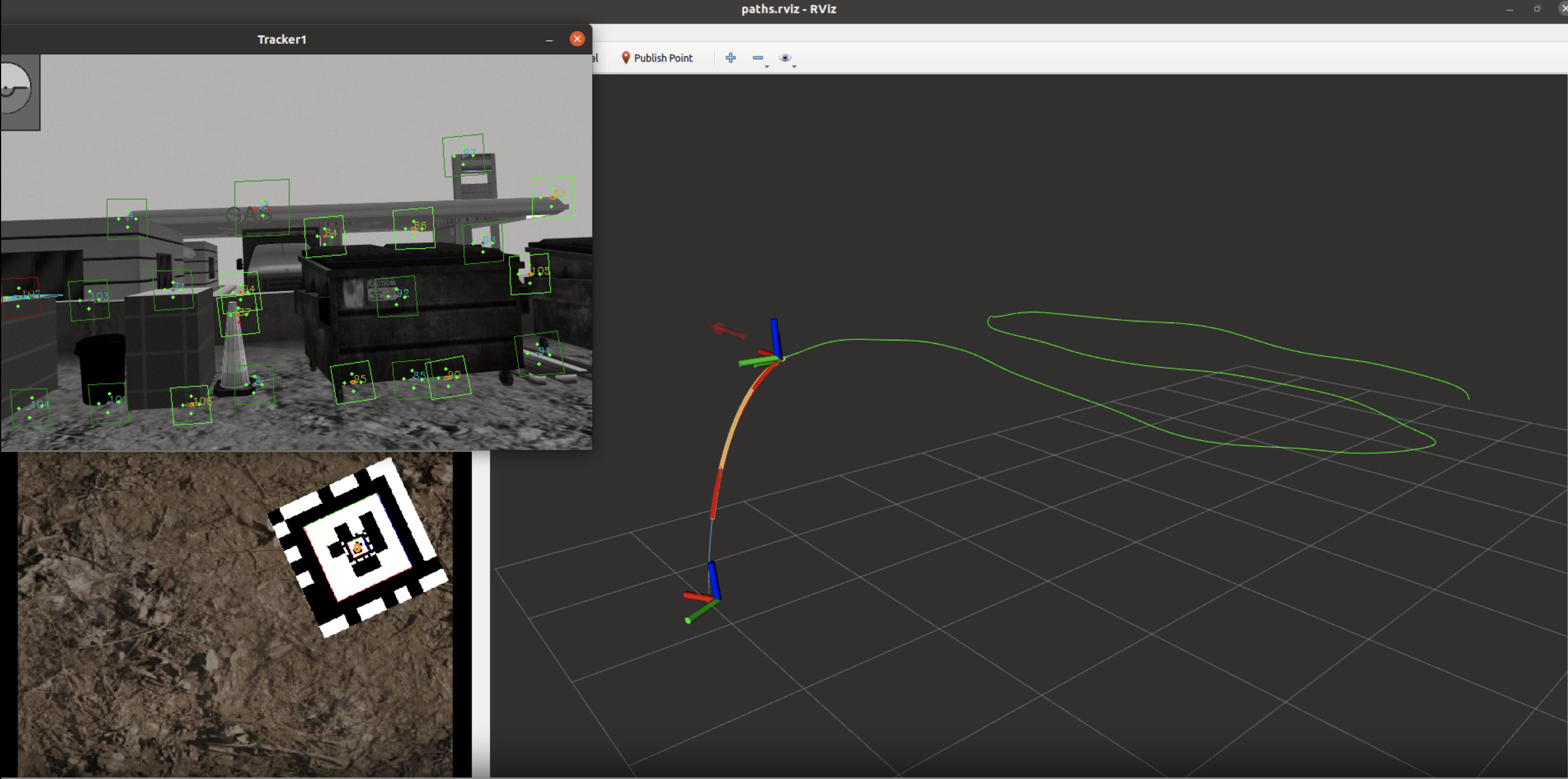

Experiments were conducted in simulation (Gazebo Classic) and in the real world to test the algorithm.

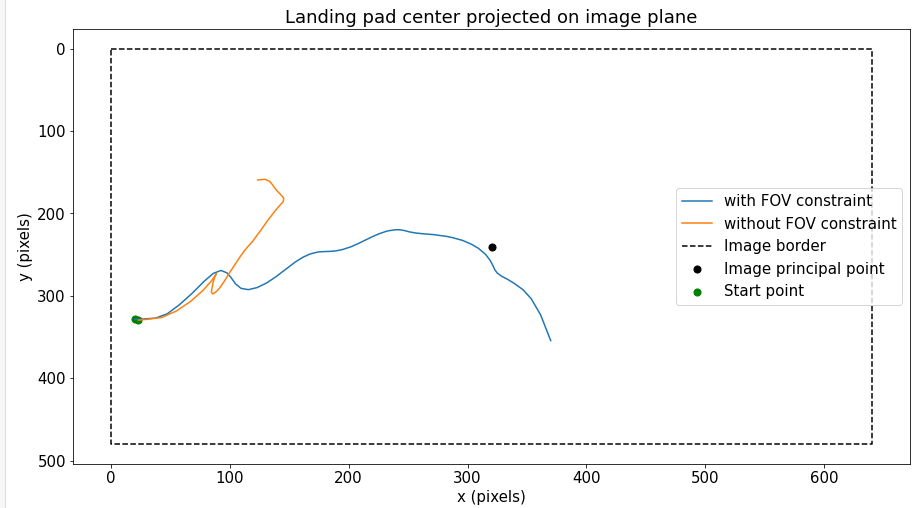

Results

We can see that the FOV-constrained trajectory keeps the projected landing-pad center closer to the image center than the trajectory flown in the experiment without FOV constraints.

References

[1] D. Falanga, P. Foehn, P. Lu and D. Scaramuzza, “PAMPC: Perception-Aware Model Predictive Control for Quadrotors,” 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems

[2] D. Mellinger and V. Kumar, “Minimum snap trajectory generation and control for quadrotors,” 2011 IEEE International Conference on Robotics and Automation

[3] M. Krogius, A. Haggenmiller and E. Olson, “Flexible Layouts for Fiducial Tags,” 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems

[4] M. Bloesch, M. Burri, S. Omari, M. Hutter and R. Siegwart, “Iterated extended Kalman filter based visual-inertial odometry using direct photometric feedback,” The International Journal of Robotics Research, 2017